- Home & Kitchen

- Mobile & Computers

- Headsets & Microphones

- Computer Speakers

- System Cooling

- Computer Game Controllers

- Computer Keyboards

- Network Hub & Switches

- Networking Products

- Cable, DSL & Wireless Modems

- NIC

- MP3 & Media Players

- CPUs & Computer Processor Upgrades

- Graphics Cards

- CD & DVD Drives

- RAM & Memory Upgrades

- More Mobile & Computers Categories

- Appliances & Electronics

- Pets

- Fashion

- AutoMobiles & Tools

- Sports

- Beauty & Health

- Swimming Pools & Spas

- Winter Recreation

- Yoga & Pilates Equipment

- Weight Machines

- Cardio Equipment Accessories

- Free Weight Equipment

- Equestrian Clothing & Equipment

- Vision Care

- Lab Supplies & Equipment

- Medical & Orthopedic Supplies

- Biometric Monitors

- Vitamins & Nutrition

- Dental Supplies

- Oral Irrigators

- More Beauty & Health Categories

- Baby & Toys Products

- Jewelry

- Food & Grocery

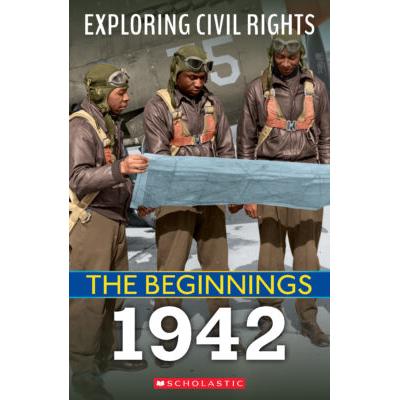

- Books

- Miscellaneous

- Miscellaneous Storage Peripherals

- Miscellaneous Software

- Miscellaneous Men's Accessories

- Miscellaneous Women's Accessories

- Halloween Props & Effects

- Halloween Makeup & Prosthetics

- Miscellaneous Toys & Games

- Miscellaneous Gifts, Flowers & Food

- Miscellaneous Appliances

- Miscellaneous

- Miscellaneous Fiction Books

- Miscellaneous Books

- Miscellaneous Automotive

- Miscellaneous DVDs & Videos

- More Miscellaneous Categories

- Blog